Published on 24 January 2026

How to Improve Your Website’s SEO and Rank Better in Search Engines and ChatGPT

Discover how SEO has evolved, what search engines and ChatGPT have in common, and how to optimize your website to rank better today and in the future.

Improving a website’s SEO is no longer just about “adding keywords.” For years, that was the dominant idea, and for a while it worked. Today, however, rankings depend on something much broader: your site must be crawlable, understandable, trustworthy, and technically healthy—both for traditional search engines and for new artificial intelligence systems such as ChatGPT.

If your website has broken links, indexation errors, or structural issues, it doesn’t matter how good your content is. It will not rank as it should. And increasingly, it will also fail to be a reliable source for systems that generate answers based on information available on the web.

SEO has not disappeared. It has evolved.

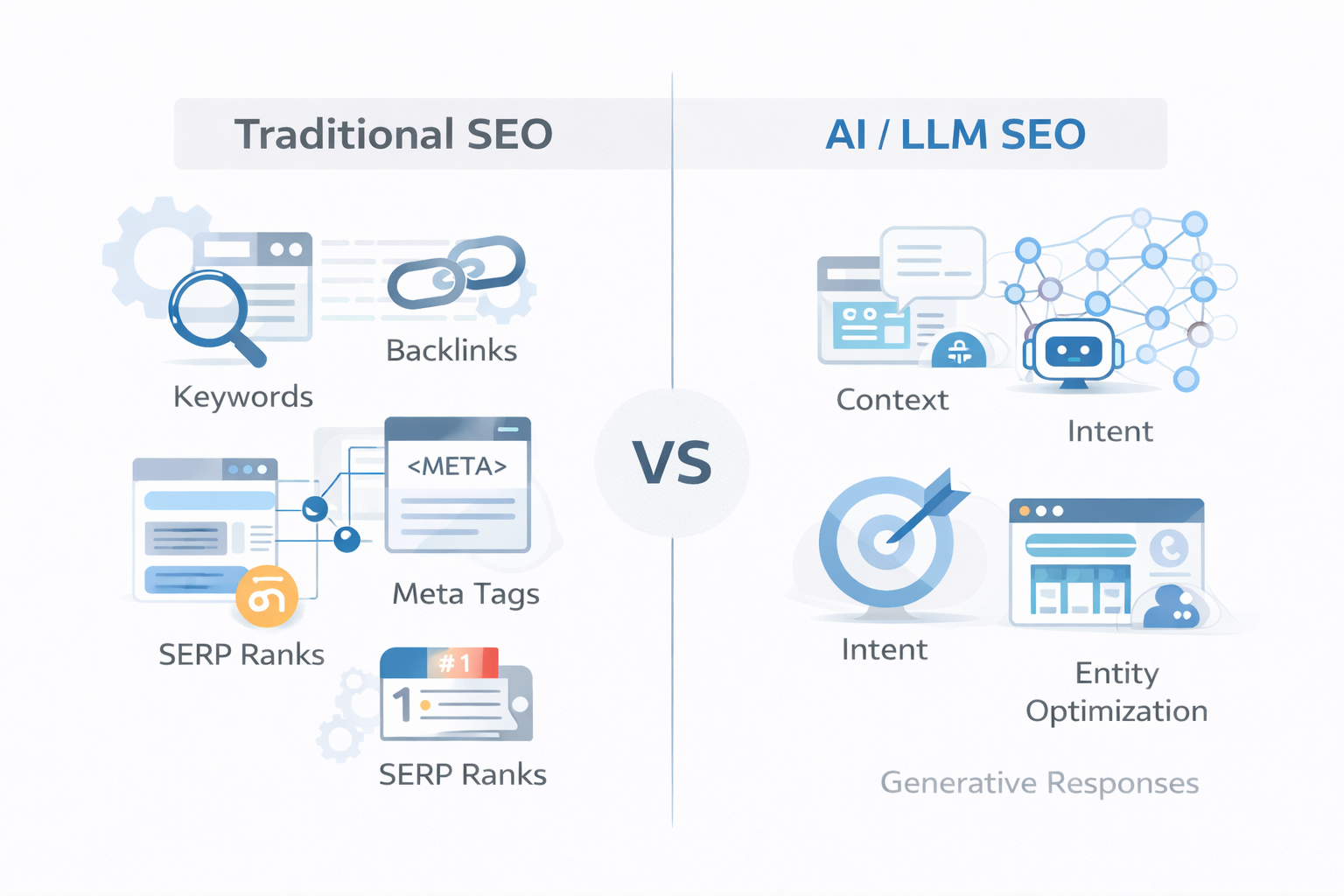

From Keywords to SEO as a System

In the early days of SEO, optimizing a website was relatively simple. Repeating a keyword, placing it in the title, adding a few basic tags, and acquiring some links was often enough. Algorithms were rudimentary, and there was ample room for manipulation.

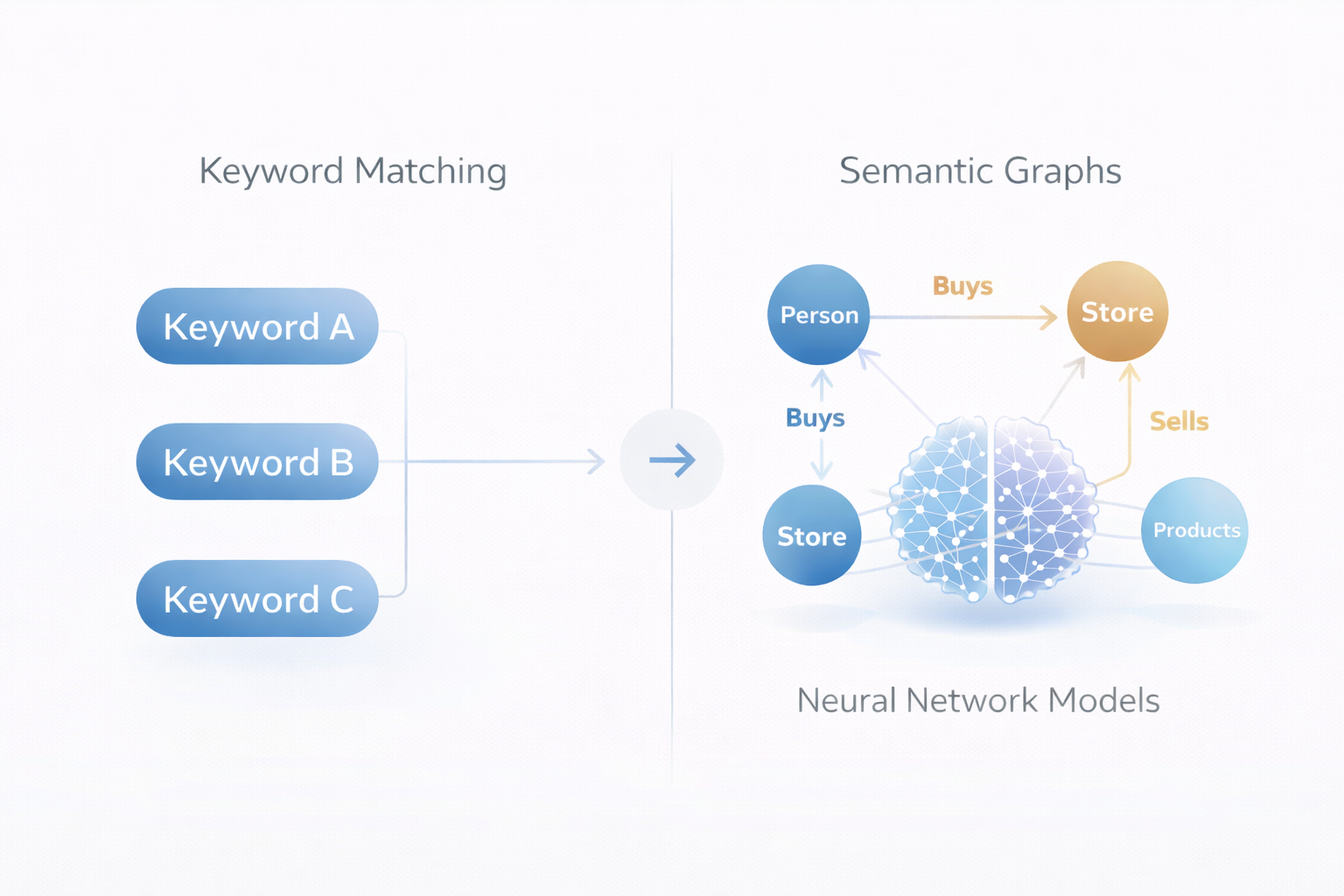

Over time, and especially as Google evolved, ranking systems have become far more complex. Today, hundreds of factors are involved in determining a page’s visibility: genuine content quality, domain authority, internal linking, user experience, technical performance, semantic structure, and trust signals.

SEO stopped being a collection of isolated tricks and became a system of interconnected signals.

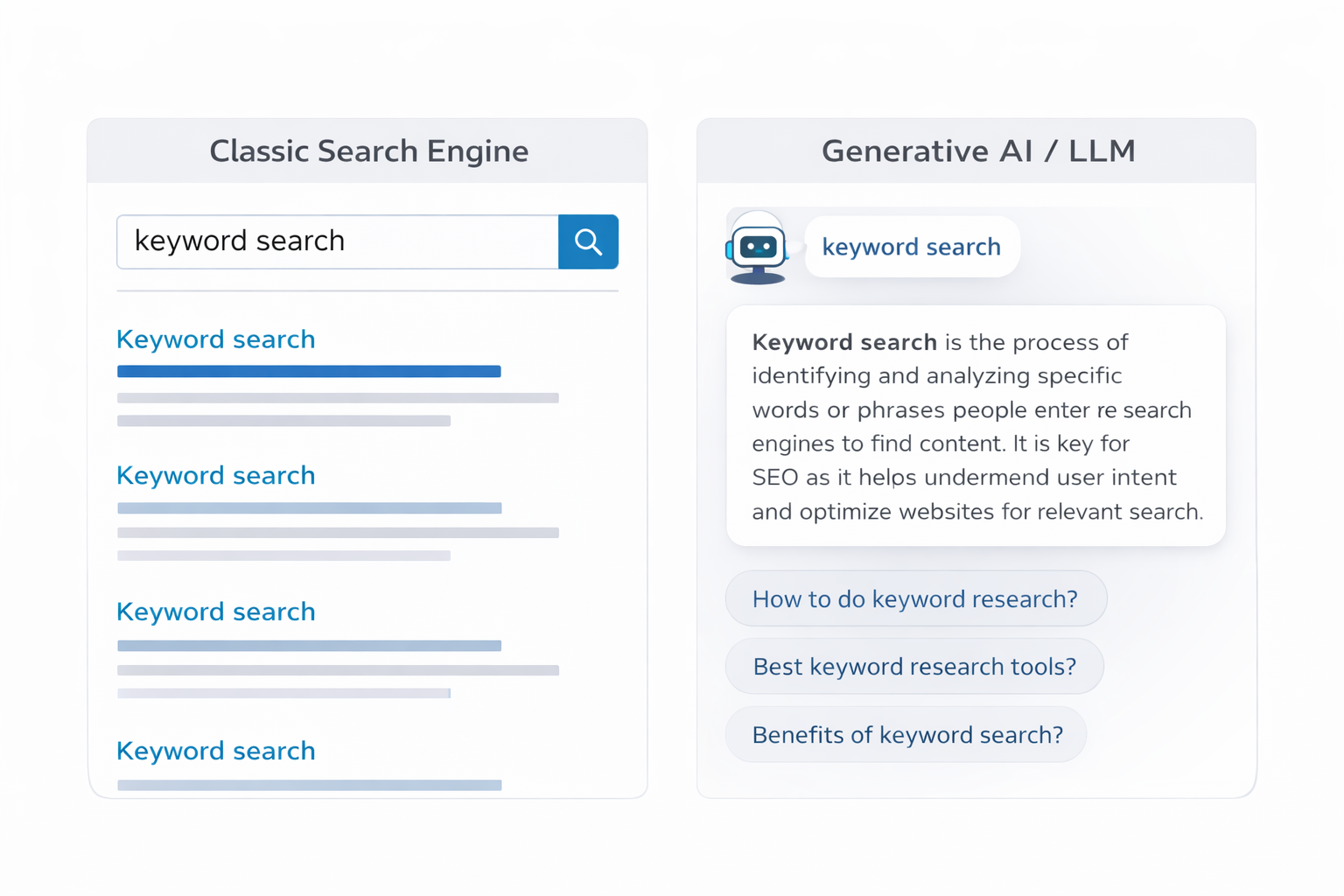

The Impact of LLMs and ChatGPT on Modern SEO

The arrival of large language models has changed how information is consumed, but it has not invalidated SEO. On the contrary, it has reinforced it.

ChatGPT and other LLMs are trained on very large collections of documents, draw on information that already ranks in search engines, and tend to trust sources that show clear signals of authority, structure, and reliability. They do not operate outside the web ecosystem; they are built on top of it.

This leads to a key idea:

SEO that performs well in search engines is, to a large extent, the SEO that AI systems understand and reuse.

We are not talking about two different disciplines, but about the same language interpreted by different systems.

What Search Engines and AI Systems Need to Understand Your Website

For a website to perform well in this new context, it needs a solid shared foundation: clear architecture, accessible pages, the absence of technical errors, semantic consistency, and strong authority signals.

If a URL cannot be crawled or indexed correctly, it simply does not exist—neither for traditional SEO nor for AI systems that depend on search engine data.
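One small part of crawlability can be checked with nothing more than a site's robots.txt rules. The sketch below uses Python's standard `urllib.robotparser` on an in-memory robots.txt; the function name `is_crawlable`, the sample rules, and the example.com URLs are illustrative assumptions, not taken from any real site. Note that robots.txt governs crawling only, so this does not cover indexation signals such as noindex or canonical tags.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt rules allow `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content used only for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/report"):
        state = "crawlable" if is_crawlable(SAMPLE_ROBOTS, url) else "blocked"
        print(f"{url}: {state}")
```

In a real audit the robots.txt text would come from the site itself, and the check would be repeated for each user agent you care about.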

Concepts such as technical SEO, semantic SEO, domain authority, rich snippets, E-E-A-T (experience, expertise, authoritativeness, and trust), and internal linking are no longer optional. They are the foundation on which any sustainable ranking strategy is built.

The Invisible Errors Holding Your Website Back

This is where many projects fall short. They have content, and sometimes even a well-defined strategy, but they carry technical issues that limit growth without being obvious at first glance.

In practice, improving your website’s SEO means controlling and optimizing very specific elements that directly influence how search engines and AI systems interpret your site, such as:

- URLs returning 4xx or 5xx errors, which break crawling and waste internal authority.
- Poorly managed 3xx redirects, especially unnecessary redirect chains that dilute SEO signals.
- Important pages with indexation issues, caused by unintended noindex tags, technical blocks, or incorrect canonical tags.
- Missing or duplicated titles and meta descriptions, which reduce relevance and CTR.
- Incorrect canonical tags, leading to duplication and keyword cannibalization.
- Missing or poorly implemented H1 tags, often a symptom of template and hierarchy problems.

These are not minor details. They are structural factors that directly affect a website’s ability to rank well.
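The first two items in the list above (error responses and redirect chains) lend themselves to a simple check once crawl data is available. The following is a minimal sketch, assuming the crawl has already produced a mapping of redirect sources to targets; `status_class`, `redirect_chain`, and the `REDIRECTS` sample are hypothetical names and data invented for illustration.

```python
from typing import Dict, List, Tuple


def status_class(code: int) -> str:
    """Group an HTTP status code into a coarse class (2xx, 3xx, 4xx, 5xx)."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"


def redirect_chain(
    start: str, redirects: Dict[str, str], max_hops: int = 10
) -> Tuple[List[str], bool]:
    """Follow `start` through a {source: target} redirect map.

    Returns (chain, is_loop). The chain lists every URL visited, starting
    with `start`; is_loop is True if a URL was reached a second time.
    At most `max_hops` redirects are followed.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects and len(chain) <= max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True
        seen.add(current)
    return chain, False


# Hypothetical crawl output: each key redirects to its value.
REDIRECTS = {
    "/old": "/older",
    "/older": "/new",
    "/a": "/b",
    "/b": "/a",
}

if __name__ == "__main__":
    for url in ("/old", "/a", "/new"):
        chain, is_loop = redirect_chain(url, REDIRECTS)
        hops = len(chain) - 1
        label = "loop" if is_loop else ("chain" if hops > 1 else "fine")
        print(f"{url}: {' -> '.join(chain)} ({hops} hops, {label})")
```

Any chain with more than one hop is a candidate for collapsing into a single redirect to the final URL, and any loop needs fixing before the page can be reached at all.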

Optimizing Your Website for Present and Future SEO

Optimizing a website today means accepting that rankings are not achieved solely by creating new content, but by ensuring that everything already published is correctly structured, accessible, and aligned with the criteria used by search engines and AI systems.

Technical SEO stops being a one-time audit and becomes a continuous improvement process. Every error fixed strengthens site authority. Every indexation issue resolved expands the real reach of your content. Every structural optimization makes semantic interpretation easier.
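To make that continuous process concrete, the on-page problems mentioned earlier (noindex tags, canonical tags, titles, meta descriptions, and H1s) can be turned into a repeatable check. What follows is only a sketch built on Python's standard `html.parser`; the class `PageAudit`, the function `audit_page`, and the issue labels are assumptions made for this example, and the canonical comparison is a deliberately naive exact string match.

```python
from html.parser import HTMLParser
from typing import List, Optional


class PageAudit(HTMLParser):
    """Collect the handful of on-page signals this sketch looks at."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description: Optional[str] = None
        self.noindex = False
        self.canonical: Optional[str] = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta":
            name = (a.get("name") or "").lower()
            content = a.get("content") or ""
            if name == "description":
                self.meta_description = content.strip()
            elif name == "robots" and "noindex" in content.lower():
                self.noindex = True
        elif tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.canonical = a.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html: str, url: str) -> List[str]:
    """Return a list of issue labels for one page; an empty list means none found."""
    page = PageAudit()
    page.feed(html)
    page.close()
    issues = []
    if not page.title.strip():
        issues.append("missing title")
    if not page.meta_description:
        issues.append("missing meta description")
    if page.noindex:
        issues.append("noindex present")
    if page.canonical is None:
        issues.append("missing canonical")
    elif page.canonical != url:  # naive comparison: no URL normalization
        issues.append("canonical points elsewhere")
    if page.h1_count == 0:
        issues.append("missing h1")
    elif page.h1_count > 1:
        issues.append("multiple h1")
    return issues
```

A page whose canonical points elsewhere or that carries noindex is not necessarily wrong; the labels flag pages to review. Detecting duplicated titles or descriptions would require comparing results across pages, which this single-page sketch does not do.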

The Role of SDR Crawl in This New SEO Landscape

In this context, SDR Crawl is not the protagonist of SEO, but the ally that makes it visible. Its value lies in helping identify technical errors and optimizations that have a direct impact on visibility—issues that are often invisible without a global view of the site.

By detecting and prioritizing these critical points, SDR Crawl helps align a website with the shared principles of SEO past, present, and future: structure, accessibility, authority, and consistency.

The SEO that works today does not replace the old one—it builds on it. Keywords still matter. Structure still matters. Authority still matters. The difference is that now everything must be connected, clean, and technically sound.

Improving your website’s SEO is no longer about applying isolated tricks.

It is about building sites that deserve to be understood, recommended, and ranked—both by search engines and by the artificial intelligence systems that rely on them.

That is the real starting point for the SEO to come.